Research

Conference Publication – Upcoming 2026

January 25, 2026

An upcoming article titled “Design-Driven Learning Reaches Parity with General-Purpose Pretraining: Evidence from the JONES-19 Cultural Design Dataset” asks whether AI can acquire design or cultural competence without the “visual common sense” that general-purpose pretraining (e.g., on ImageNet) is assumed to provide. The work is currently under review for the 2026 ACM Creativity & Cognition Conference, to be held in London, UK.

Long Abstract

Design and architectural archives represent specialized repositories of expert knowledge encoded in graphical formats—drawings, diagrams, patterns. The documented history of architecture and art contains numerous well-studied design artifacts, from ornamental motifs to furniture and building designs. When digitized, these cultural collections offer new opportunities at the intersection of Machine Learning (ML) and design scholarship. They provide a critical testbed for design-inspired ML challenges that may not arise with typical computer vision benchmarks, while simultaneously opening new avenues for understanding human intelligence in creative domains.

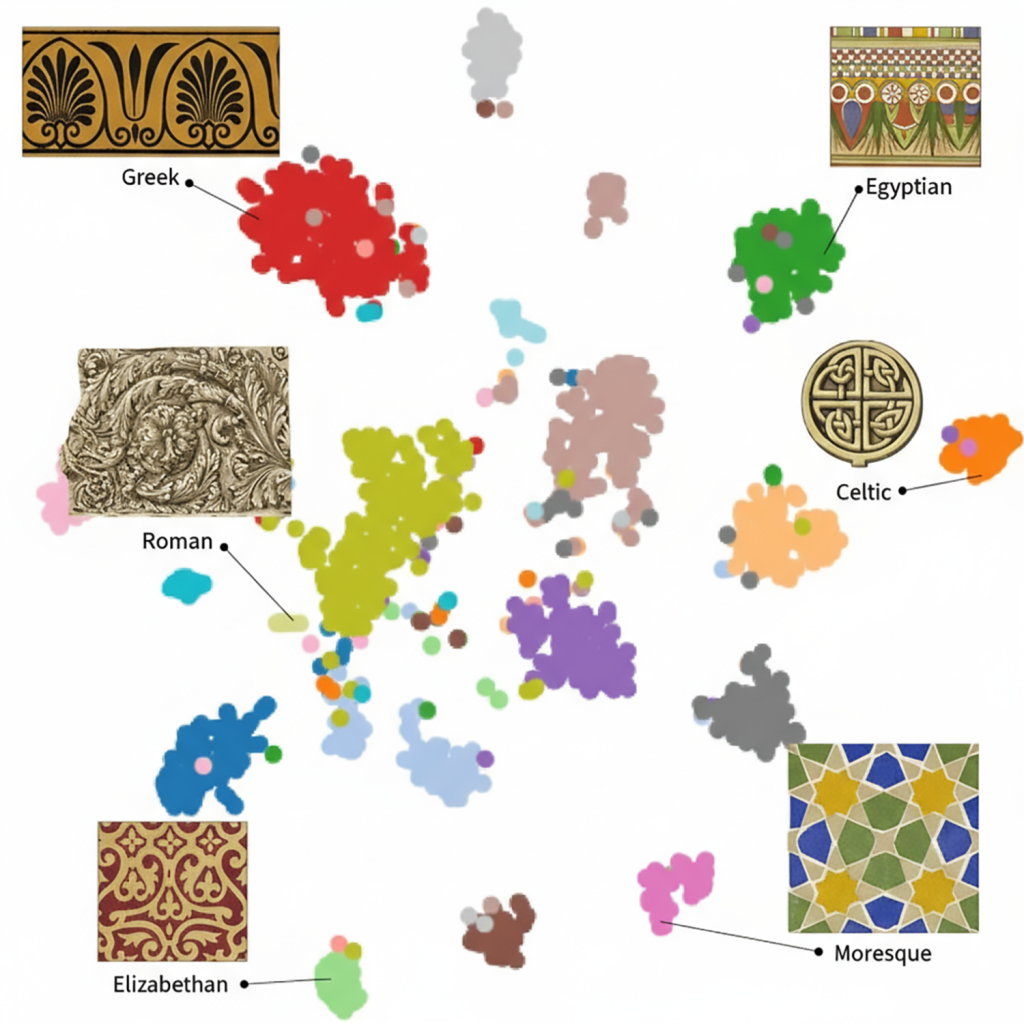

This paper examines how domain-general visual knowledge, acquired through ImageNet pretraining, influences the discriminative performance of Convolutional Neural Networks (CNNs) on JONES-19, a small dataset of ornament designs based on The Grammar of Ornament (1857) by Owen Jones [1, 2, 35]. To what extent is the “visual common sense” derived from millions of natural images (e.g., ImageNet) necessary to capture the visual representations inherent in a specialized, high-quality collection of abstract ornament designs? We investigate this tension between domain-general priors and design-driven learning through the lens of JONES-19 by comparing two training strategies: (a) leveraging ImageNet pretraining to provide visual common sense, and (b) learning from scratch solely on JONES-19. We devise controlled experiments with ResNet18 and ResNet50 that evaluate four conditions for each architecture: with and without ImageNet-1K pretraining, crossed with and without multi-crop augmentation.
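As a rough illustration of the experimental design described above, the conditions can be enumerated as a small grid. This is only a sketch: the `Condition` type, the field names, and the lowercase architecture labels are hypothetical and do not come from the paper's code.

```python
from itertools import product
from typing import List, NamedTuple


class Condition(NamedTuple):
    """One training run in the comparison (hypothetical naming)."""
    architecture: str          # "resnet18" or "resnet50"
    imagenet_pretrained: bool  # True: start from ImageNet-1K weights
    multi_crop: bool           # True: train with multi-crop augmentation


# The two architectures named in the abstract.
ARCHITECTURES = ("resnet18", "resnet50")


def experiment_grid() -> List[Condition]:
    """Cross each architecture with the 2 x 2 pretraining/augmentation settings."""
    return [
        Condition(arch, pretrained, multi_crop)
        for arch, pretrained, multi_crop in product(
            ARCHITECTURES, (False, True), (False, True)
        )
    ]


if __name__ == "__main__":
    for condition in experiment_grid():
        print(condition)
```

Each architecture yields four conditions, so the grid contains eight runs in total; the from-scratch, multi-crop run is the one compared against the pretrained runs.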

Our central question is: To what extent does ImageNet pretraining improve classification accuracy on specialized ornament data, and how does its impact compare to the performance gains from extensive data augmentation alone? While domain-general priors improve performance, we find that learning from scratch, when augmented with multi-crop sampling, effectively recovers these gains. This suggests that for highly structured design data, local design-driven representations provide a sufficient foundation for learning, challenging assumptions about the necessity of large-scale general-purpose pretraining.
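The abstract does not specify how multi-crop sampling is implemented. The sketch below assumes one common reading: several fixed-size square crops drawn at random positions from each image. The function name, the crop size, and the number of crops are all hypothetical choices made for illustration, not the authors' settings.

```python
import random
from typing import List, Tuple

# (left, top, right, bottom) in pixel coordinates
Box = Tuple[int, int, int, int]


def sample_crop_boxes(
    width: int,
    height: int,
    crop_size: int,
    num_crops: int,
    seed: int = 0,
) -> List[Box]:
    """Sample `num_crops` random square crop boxes that fit inside the image.

    Assumption: crops are uniform in position and fixed in size; the paper's
    actual sampling scheme may differ.
    """
    if crop_size > min(width, height):
        raise ValueError("crop_size must fit inside the image")
    rng = random.Random(seed)  # seeded for reproducible sampling
    boxes = []
    for _ in range(num_crops):
        left = rng.randint(0, width - crop_size)   # inclusive bounds
        top = rng.randint(0, height - crop_size)
        boxes.append((left, top, left + crop_size, top + crop_size))
    return boxes


if __name__ == "__main__":
    # Hypothetical 512 x 384 scan, ten 224 x 224 local crops.
    for box in sample_crop_boxes(512, 384, crop_size=224, num_crops=10):
        print(box)
```

Under this reading, each ornament plate contributes many local views to training, which is one way a small dataset could supply the volume of examples that pretraining would otherwise stand in for.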

These findings have practical implications for specialized domains: careful curation of smaller, high-quality datasets reflecting empirical and formal design principles may prove more effective than prioritizing dataset scale, as performance can be recovered through extensive local sampling strategies. Building on the original JONES-19 publication’s baseline benchmarks, this work advances our understanding of how models acquire and transfer visual knowledge across domains. We hope to motivate joint investigation by the design and ML communities into questions that lead toward more human-aligned intelligence inspired by design and architectural principles.

Co-authors:

Charles Zhou (CS & Economics, Harvard University)

Helen He (CS & East Asian Studies, Harvard University)

Stuart Shieber (Professor of Computer Science, Harvard University)

Downloads

Download Publisher’s Version (Open Access)

Download Preprint (Harvard DASH)