Research

Conference Publication – ACM KUI 2025

August 26, 2025

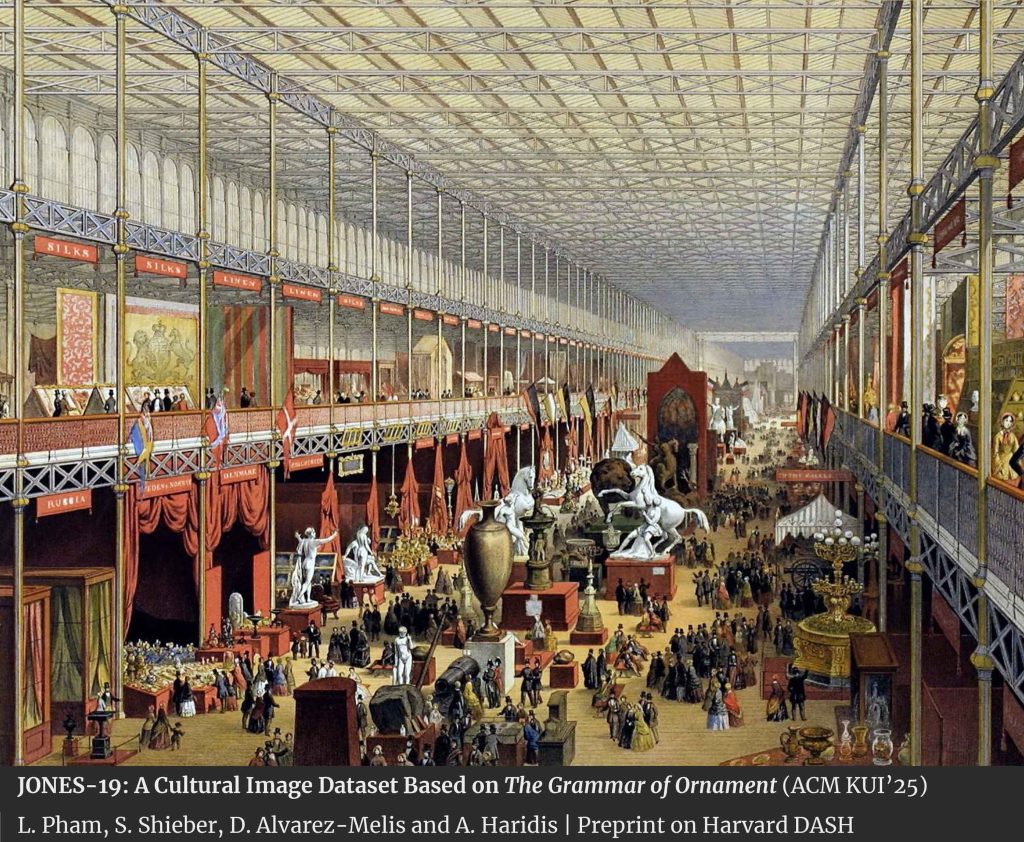

New peer-reviewed conference publication, “JONES-19: A Cultural Image Dataset Based on The Grammar of Ornament,” in the ACM International Conference on Culture and Computer Science. The conference is organized by HTW – University of Applied Sciences Berlin and the Cluster of Excellence “Matters of Activity. Image Space Material,” Humboldt-Universität zu Berlin, and takes place at the Kulturforum Berlin, Germany, in September 2025.

Long Abstract

The limited use and success of machine learning (ML) methods on design or cultural collections can be attributed to issues related to access and quality of available data sources. The history of architecture and art, however, contains numerous well-documented instances of design artifacts – from ornaments and patterns to building and furniture designs. The digitized or “virtual” manifestations of these documented artifacts have the potential to introduce new important frontiers for discriminative or generative modeling research in ML as well as for the analysis or generation of design and cultural collections.

This paper introduces JONES-19, a new high-quality image dataset of cultural ornament designs. The images and their annotations are based on an open access archive of The Grammar of Ornament (London, 1856), an influential 19th-century book of ornament designs by the British architect and designer Owen Jones. The ornament designs originate from nineteen human cultures, including Indigenous Tribes, Greek, Egyptian, and Chinese.

Because JONES-19 is a design-inspired dataset that documents a specialized type of design object (i.e., ornament designs), it differs significantly from large datasets that document common objects in-context or natural scenes. It also differs from large galleries of artworks from different periods, styles or artists typically found in the digitized archives of museums and libraries. The specificity that characterizes JONES-19 can serve as a new benchmark for various research fields, such as fine-grained visual recognition, data-efficient learning, domain adaptation, art-historical research, architectural style analysis, and cultural heritage.

In this paper, we describe the curation of the JONES-19 dataset and report a baseline classification benchmark. We evaluate the suitability of the dataset for training classifiers and expose insights into inter-class relationships – particularly cultural similarities – by examining patterns in misclassification errors.
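As an illustration of the kind of misclassification analysis described above, the following minimal sketch counts which pairs of classes a classifier confuses most often. It is not the authors' evaluation code: the function name `top_confused_pairs` is hypothetical, and the labels are invented for illustration and are not results from the paper.

```python
from collections import Counter

def top_confused_pairs(y_true, y_pred, k=3):
    """Return the k most frequently confused class pairs.

    Pairs are unordered, so Greek->Egyptian and Egyptian->Greek errors
    count toward the same pair. Correct predictions are ignored.
    """
    pairs = Counter(
        tuple(sorted((t, p)))
        for t, p in zip(y_true, y_pred)
        if t != p
    )
    return pairs.most_common(k)

# Hypothetical labels, invented for illustration only.
y_true = ["Greek", "Greek", "Greek", "Egyptian", "Egyptian", "Chinese", "Chinese"]
y_pred = ["Egyptian", "Egyptian", "Greek", "Greek", "Egyptian", "Chinese", "Greek"]

confusions = top_confused_pairs(y_true, y_pred, k=2)
for (a, b), count in confusions:
    print(f"{a} <-> {b}: {count} errors")
```

Treating pairs as unordered is a design choice that fits a reading of errors as evidence of similarity between two cultures; keeping the direction of each error would instead show which class absorbs the other's images.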

Our aim is to introduce an image dataset that poses important challenges at the intersection of ML and art and design: a small sample size of 1,901 images; a specialized class of human-designed artifacts rather than natural scenes or common objects in-context; imbalanced class distribution and high visual diversity across the nineteen cultures; and fine-grained distinctions across per-class images and across classes, characterized by fine details involving line patterns, reliefs, and colors. In addition to well-established research problems (image localization, segmentation, classification, and representation learning), our dataset raises more concrete learning challenges related to design. Two such challenges are learning the symmetry groups that characterize the ornament designs of a culture, and modeling design similarities between cultures that may ground art-historical knowledge or evidence about design exchanges.
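One of the challenges listed above, imbalanced class distribution, is often handled by weighting each class inversely to its frequency during training. The sketch below shows that common heuristic, weight = N / (K × n_c) for N samples, K classes, and n_c samples in class c. It is an assumption for illustration, not a description of the paper's baseline; the function name `balanced_class_weights` and the label counts are hypothetical.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Map each class to N / (K * n_c), so rarer classes get larger weights."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {c: n_samples / (n_classes * n_c) for c, n_c in counts.items()}

# Hypothetical, deliberately imbalanced label counts (not the real dataset).
labels = ["Greek"] * 6 + ["Egyptian"] * 3 + ["Chinese"] * 1

weights = balanced_class_weights(labels)
for culture, weight in sorted(weights.items()):
    print(f"{culture}: {weight:.3f}")
```

Such weights can be passed to a weighted loss so that errors on sparsely represented cultures are not drowned out by the larger classes.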

JONES-19 can serve as a platform for computationally investigating cross-cultural design exchanges and for probing the intelligence involved in discerning one design from another, a human skill that remains central to architecture, art, and design.

Funding provided by the Harvard Data Science Initiative.

Co-authors:

Linh Pham (Masters, Design Engineering track, Harvard University)

David Alvarez-Melis (Assistant Professor of Computer Science, Harvard University)

Stuart Shieber (Professor of Computer Science, Harvard University)

Downloads

Download Publisher’s Version (Open Access)

Download Preprint (Harvard DASH)

Dataset on HuggingFace